At re:Invent in Las Vegas in December 2019, AWS announced the public preview of RDS Proxy, a fully managed database proxy that sits between your application and RDS. The new service offers to “share established database connections, improving database efficiency and application scalability”.

A first look

In January I shared some thoughts and first results at the AWS User Group Meetup in Berlin and I wrote a post for the Percona Community Blog: A First Look at Amazon RDS Proxy.

One of the key features was the ability to increase application availability by significantly reducing failover times on a Multi-AZ RDS instance. The results were indeed impressive.

But a key limitation was that there was no way to change the instance size or class once the proxy had been created. That meant it could not be used to reduce downtime during vertical scaling of the cluster, and it made the deployment less elastic.

Time for a second look?

Last week AWS finally announced the GA of RDS Proxy and I thought it was a good time to take a second look at the service. Any further improvements in the failover? Can you now change the instance size once the proxy has been created?

Weird defaults?

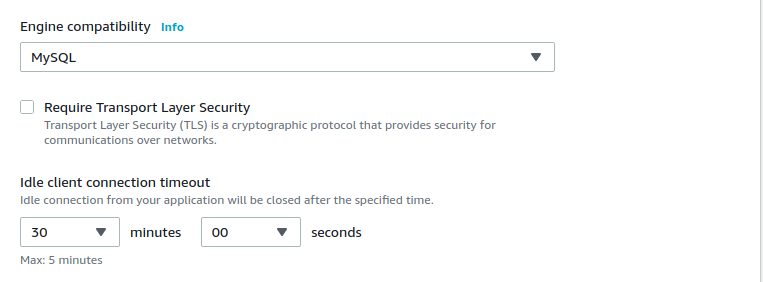

One of the first and few values you have to choose when you set up an Amazon RDS Proxy is the idle client connection timeout. It is already hard to figure out the optimal value in an ideal scenario. But a user interface that suggests a default of 30 minutes next to a label that states “Max: 5 minutes” makes it more difficult, all the more so when the drop-down list lets you set any value up to 1 hour.

Let us play!

Once again I created a test-rds instance and a test-proxy, and I decided to run the very same basic tests I did last December. I started two while loops in Bash, relying on the MySQL client, each one asking the database for the current date and time every 2 seconds:

$ while true; do mysql -s -N -h test-proxy.proxy-***.eu-central-1.rds.amazonaws.com -u testuser -e "select now()"; sleep 2; done

$ while true; do mysql -s -N -h test-rds.***.eu-central-1.rds.amazonaws.com -u testuser -e "select now()"; sleep 2; done

Both return the same results:

2020-07-04 20:24:12

2020-07-04 20:24:14

2020-07-04 20:24:16

2020-07-04 20:24:18

So far so good. I then trigger a reboot with failover of the test-rds instance. What is the delay on the two endpoints?

test-proxy

2020-07-04 20:24:56

2020-07-04 20:24:58

2020-07-04 20:25:20

2020-07-04 20:25:22

test-rds

2020-07-04 20:24:56

2020-07-04 20:24:58

2020-07-04 20:27:12

2020-07-04 20:27:14

The difference between test-proxy and test-rds is significant: leaving aside the normal 2-second polling interval, it takes 132 seconds for the RDS endpoint to recover versus only 20 seconds for the proxy. An amazing difference, and even better than what AWS promises based on more reliable and significant tests.
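As a sanity check, the recovery times can be recomputed from the timestamps printed by the two loops. The following is only a minimal sketch: the function name `outage_seconds` is made up for this example, and it assumes GNU `date` (for the `-d` option) and a polling interval of 2 seconds, as in the loops above.

```shell
# Extra delay, in seconds, between two consecutive successful responses,
# beyond the normal polling interval (third argument, default 2).
# Hypothetical helper; assumes GNU date for the -d option.
outage_seconds() {
  local before="$1" after="$2" interval="${3:-2}"
  local t0 t1
  t0=$(date -u -d "$before" +%s)   # last response before the failover
  t1=$(date -u -d "$after" +%s)    # first response after the failover
  echo $(( t1 - t0 - interval ))
}

outage_seconds "2020-07-04 20:24:58" "2020-07-04 20:25:20"   # test-proxy: prints 20
outage_seconds "2020-07-04 20:24:58" "2020-07-04 20:27:12"   # test-rds: prints 132
```

The raw gaps between responses are 22 and 134 seconds; subtracting the 2 seconds the loop would have slept anyway gives the 20 and 132 seconds quoted above.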

But what happens when I trigger a change of the instance type?

While the numbers for test-rds do not change significantly, the proxy is simply gone. Once the database cluster behind it changes, the proxy endpoint is still available but it no longer connects to the database. Changing the timeout does not help, and there is no simple way to recover.

test-proxy

ERROR 9501 (HY000) at line 1: Timed-out waiting to acquire database connection

ERROR 9501 (HY000) at line 1: Timed-out waiting to acquire database connection

ERROR 9501 (HY000) at line 1: Timed-out waiting to acquire database connection

ERROR 9501 (HY000) at line 1: Timed-out waiting to acquire database connection

ERROR 9501 (HY000) at line 1: Timed-out waiting to acquire database connection
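To tell a slow recovery from an endpoint that never comes back, the test loop could be given an upper bound. This is a rough sketch, not part of the original test: `wait_for_recovery` is a hypothetical helper that retries any command a fixed number of times and reports how many attempts failed before the first success.

```shell
# Retry a probe command up to max_tries times, pausing between attempts.
# Prints the number of failed attempts; returns 1 if the probe never succeeds.
# Hypothetical helper, not part of any AWS or MySQL tooling.
wait_for_recovery() {
  local max_tries="$1" pause="$2"
  shift 2
  local failures=0
  while [ "$failures" -lt "$max_tries" ]; do
    if "$@" >/dev/null 2>&1; then
      echo "recovered after $failures failed attempts"
      return 0
    fi
    failures=$((failures + 1))
    sleep "$pause"
  done
  echo "still failing after $failures attempts"
  return 1
}

# Example (not run here): wrap the same mysql command used in the loops above,
#   wait_for_recovery 150 2 mysql -s -N -h <proxy endpoint> -u testuser -e "select now()"
```

With 150 tries and a 2-second pause, the probe gives up after about five minutes, which is well beyond the failover times measured earlier.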

As of today, at least for MySQL 5.7 on RDS, introducing the proxy in the architecture makes the environment less elastic, as you no longer have any option to scale the database vertically, manually or automatically, to match traffic. Any change to the database becomes more problematic.

Anything else? A few other well-documented limitations are still present, including the lack of support for MySQL 8.0.

A final recap

Amazon RDS Proxy is a very interesting service, and it could be an essential component in many deployments where increasing application availability is critical. But I would have expected a few more improvements since the first preview. The lack of support for instance changes still makes it hard to integrate into many scenarios where RDS is currently used.