Cloud architect with a strong focus on AWS, data storage, MySQL and cloud cost optimization

AWS Data Hero

Recognized expert on AWS database technologies.

InfoQ Editor

Covering the latest news about cloud computing.

Public Speaker

Speaking and sharing knowledge at international events.

dev.to

AWS is my playground.

-

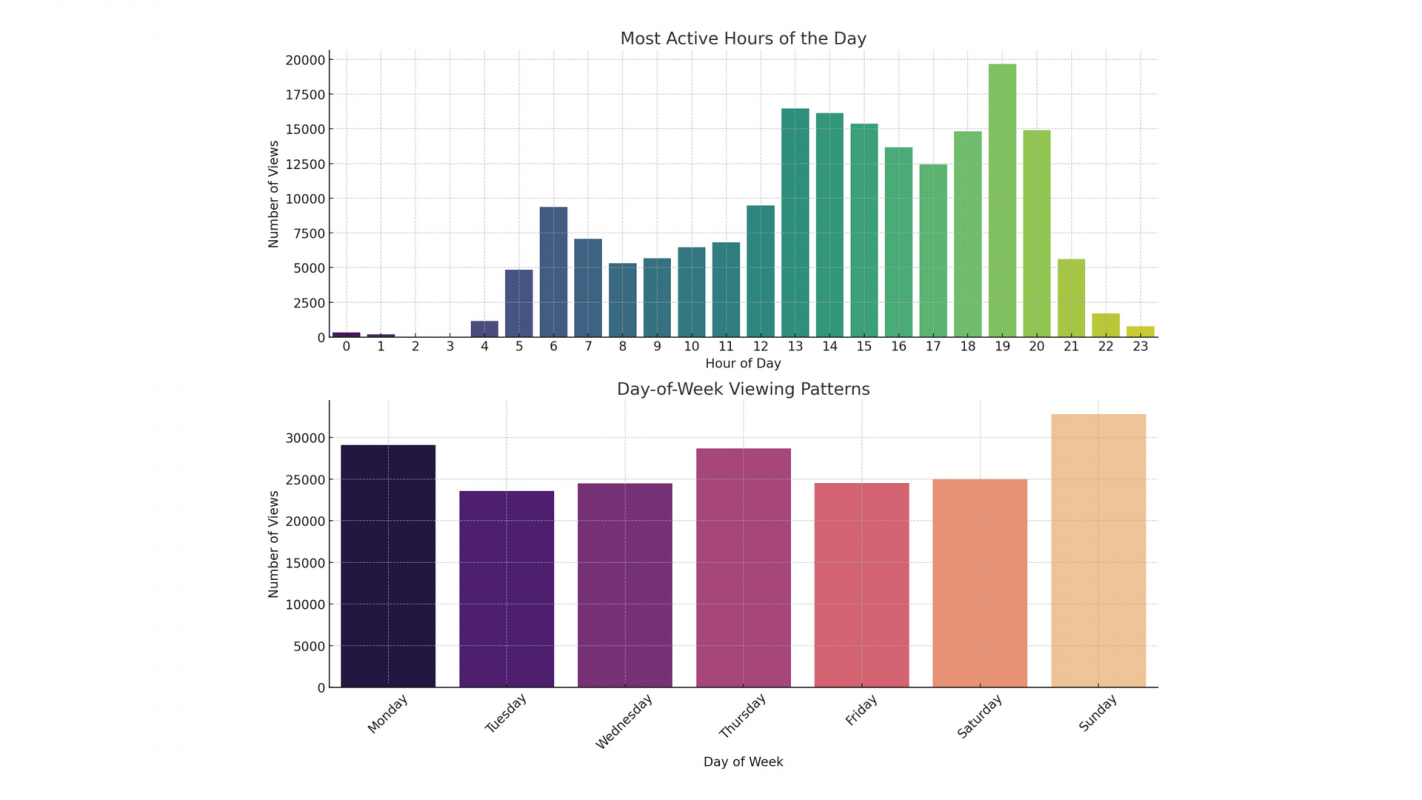

You Know What About Me? Decoding My Digital Trail Across Major Platforms

Under European rules, users can request their personal data from platforms, but few do, and the results are often hard to use. I requested and parsed my data from TikTok, Amazon, Google, and Instagram, uncovering surprising insights and useful tips. Find out what these platforms know about me, and maybe about you too! Read the full article.

-

SREday Cologne 2025!

Just over one month to go until SREday Cologne. My slides are ready, and I am currently planning my short trip to one of my favorite cities in Germany to share some tips on running MySQL workloads on managed databases, as well as some mistakes I’ve made over the past decade that you can hopefully avoid. See you…

-

InfoQ – April 2025

From Firestore with MongoDB compatibility to Redis 8, from PlanetScale Vectors to Cloudflare Security Week, a recap of the news I wrote for InfoQ in April 2025. Read the full article.

-

Things Fall Apart: Navigating Managed Databases for Over a Decade as a Non-DBA

Learn to navigate database benchmarks wisely! From crashing managed instances to skyrocketing storage costs, I’ll share hard-earned lessons from a decade of managing production databases on the cloud without DBA expertise. Read the full article.