Tag: ec2

-

InfoQ – August 2021

From cloud vulnerabilities to OpenSearch, from cloud emissions to Google Cloud Private Service Connect, from EC2-Classic to CloudWatch Cross Account…

-

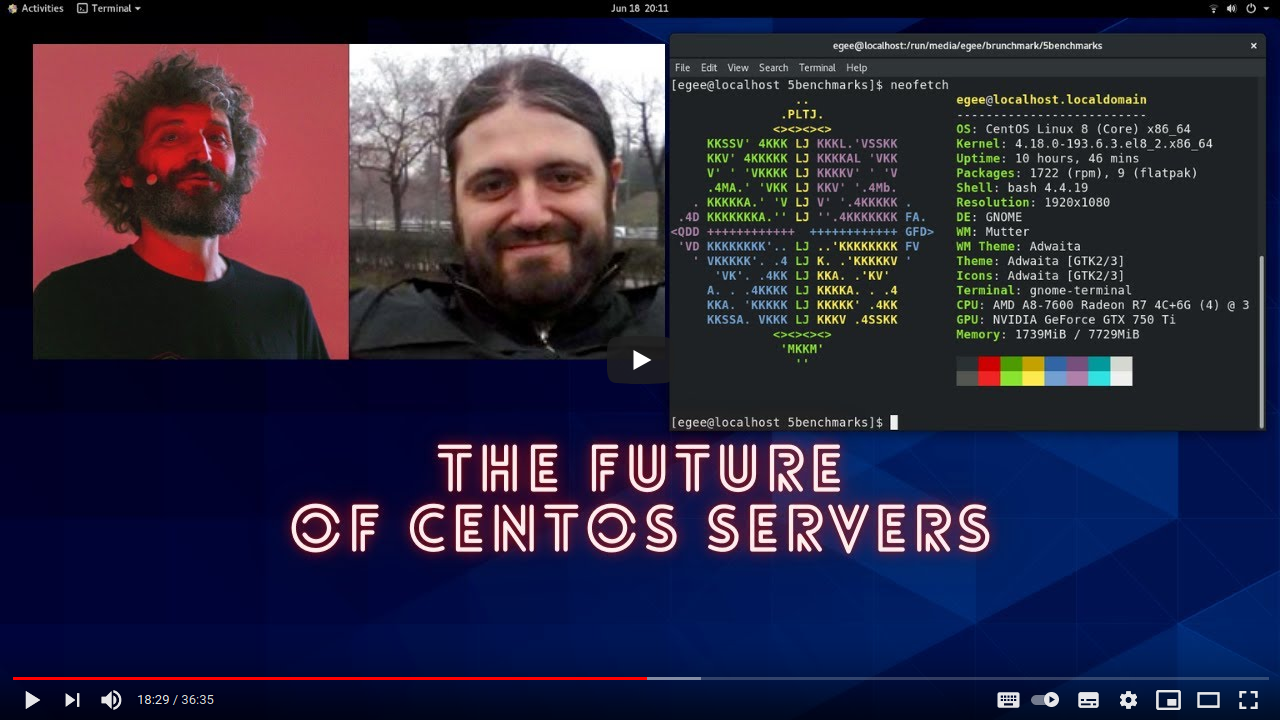

The future of CentOS and cloud deployments

Yesterday I had the pleasure of talking live with Federico Razzoli, director & database consultant at Vettabase Ltd, about the…

-

Base performance and EC2 T2 instances

Almost three years ago, AWS launched the now very popular T2 instances, EC2 servers with burstable performance. As Jeff Barr…
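The T2 teaser mentions burstable performance without showing how the base (baseline) performance follows from CPU credits. Below is a minimal sketch, assuming the credit model AWS documents for T2 (one CPU credit equals one vCPU running at 100% for one minute) and the t2.micro figures of 6 credits earned per hour, 30 launch credits and a 144-credit cap. The constants and function names are hypothetical, the hourly granularity is a simplification (AWS accrues and spends credits at a much finer resolution), launch credits are treated like earned credits, and T2 Unlimited mode is ignored.

```python
"""Simplified, hypothetical model of T2 CPU credits (standard mode only)."""

# Assumed t2.micro figures from AWS documentation; check current values before relying on them.
T2_MICRO_CREDITS_PER_HOUR = 6
T2_MICRO_VCPUS = 1
T2_MICRO_MAX_BALANCE = 144
T2_MICRO_LAUNCH_CREDITS = 30


def baseline_utilization(credits_per_hour: float, vcpus: int) -> float:
    """Fraction of total vCPU capacity the instance can sustain indefinitely."""
    # One credit = one vCPU at 100% for one minute, so each vCPU could spend
    # up to 60 credits per hour; the earn rate divided by that is the baseline.
    return credits_per_hour / (60 * vcpus)


def simulate_balance(start: float, credits_per_hour: float, vcpus: int,
                     utilization: float, hours: int, cap: float) -> list[float]:
    """Credit balance at the end of each hour for a constant demanded utilization (0.0-1.0)."""
    balance = start
    history = []
    for _ in range(hours):
        spent = utilization * vcpus * 60  # vCPU-minutes of full CPU the workload asks for
        balance += credits_per_hour - spent
        # Clamp: the balance cannot exceed the cap, and in standard mode it cannot
        # go below zero (the instance is held to its baseline instead).
        balance = max(0.0, min(cap, balance))
        history.append(balance)
    return history


if __name__ == "__main__":
    print(baseline_utilization(T2_MICRO_CREDITS_PER_HOUR, T2_MICRO_VCPUS))
    print(simulate_balance(T2_MICRO_LAUNCH_CREDITS, T2_MICRO_CREDITS_PER_HOUR,
                           T2_MICRO_VCPUS, 0.5, 3, T2_MICRO_MAX_BALANCE))
```

Under these assumptions a t2.micro's baseline works out to 6 / 60 = 10% of one vCPU, and a workload demanding a steady 50% drains the 30 launch credits within two hours, after which the balance stays at zero and the instance is held to the baseline.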